Dynamic Workflows in Flyte

Run-time dependency is an important consideration when building machine learning or data processing pipelines. Consider a case where you want to query your database x number of times where x can be resolved only at run-time. Here, looping is the ultimate solution (manually writing by hand is unfeasible!). When there’s a loop in the picture, a machine learning or data processing job needs to pick up an unknown variable at run-time and then build a pipeline based on the variable’s value. This process isn’t static and has to happen on the fly at run-time.

Dynamic workflows in Flyte intend to solve this problem for the users.

Introduction

Before we get an essence of how a dynamic workflow works, let’s understand the two essential components of Flyte, namely task and workflow. A task is the primary building block of any pipeline in Flyte. A collection of tasks in a specified order constitutes a workflow. A workflow in Flyte is represented using a directed acyclic graph (DAG).

A workflow can either be static or dynamic. A workflow is static when its DAG structure is known ahead of time, whereas a workflow is dynamic when the DAG structure is computed at run-time, potentially using the run-time inputs given to a task.

Inducing dynamism into data processing or machine learning workflows is a beneficial aspect in Flyte if:

- the workflow depends on the run-time variables, or

- a plan of execution (or) workflow within a task is required. This helps keep track of data lineage encapsulated in the dynamic workflow within the DAG, which isn’t the case with a task.

Note: A task is a general Python function that doesn't track the data flow within it.

Besides, a dynamic workflow can also help in building simpler pipelines. Vaguely, it provides the flexibility to mold workflows according to the project’s needs, which may not be possible with static workflows.

When to Use Dynamic Workflows?

- If a dynamic modification is required in the code logic—determining the number of training regions, programmatically stopping the training if the error surges, introducing validation steps dynamically, data-parallel and sharded training, etc.

- During feature extraction, if there’s a need to decide on the parameters dynamically.

- To build an AutoML pipeline.

- To tune the hyperparameters dynamically while the pipeline is in progress.

How Flyte Handles Dynamic Workflows

Flyte can combine the typically static nature of DAGs with the dynamic nature of workflows. With a dynamic workflow, just as a typical workflow, users can perform any arbitrary computation by consuming inputs and producing the outputs. It is capable of yielding a workflow, where the instances or the children (tasks/sub-workflows) can programmatically be constructed using inputs at execution (run) time. Hence, it is called “dynamic workflow”.

A dynamic workflow is modeled in the backend as a task, but at the execution (run) time, the function body is run to produce a workflow.

Here’s an example that “explores the classification accuracy of the KNN algorithm with k values ranging from two to seven”.

Let's first import the libraries.

Next, we define <span class=“code-inline”>tasks</span> to fetch the dataset and build models with different k values.

We now define a <span class=“code-inline”>dynamic workflow</span> that loops through the models and computes the cross-validation score. Moreover, we define a helper <span class=“code-inline”>task</span> that returns the appropriate score for every model.

Finally, we define a workflow that calls the above-mentioned tasks and dynamic workflow.

When the code is run locally, the following output is shown (the output is arbitrary):

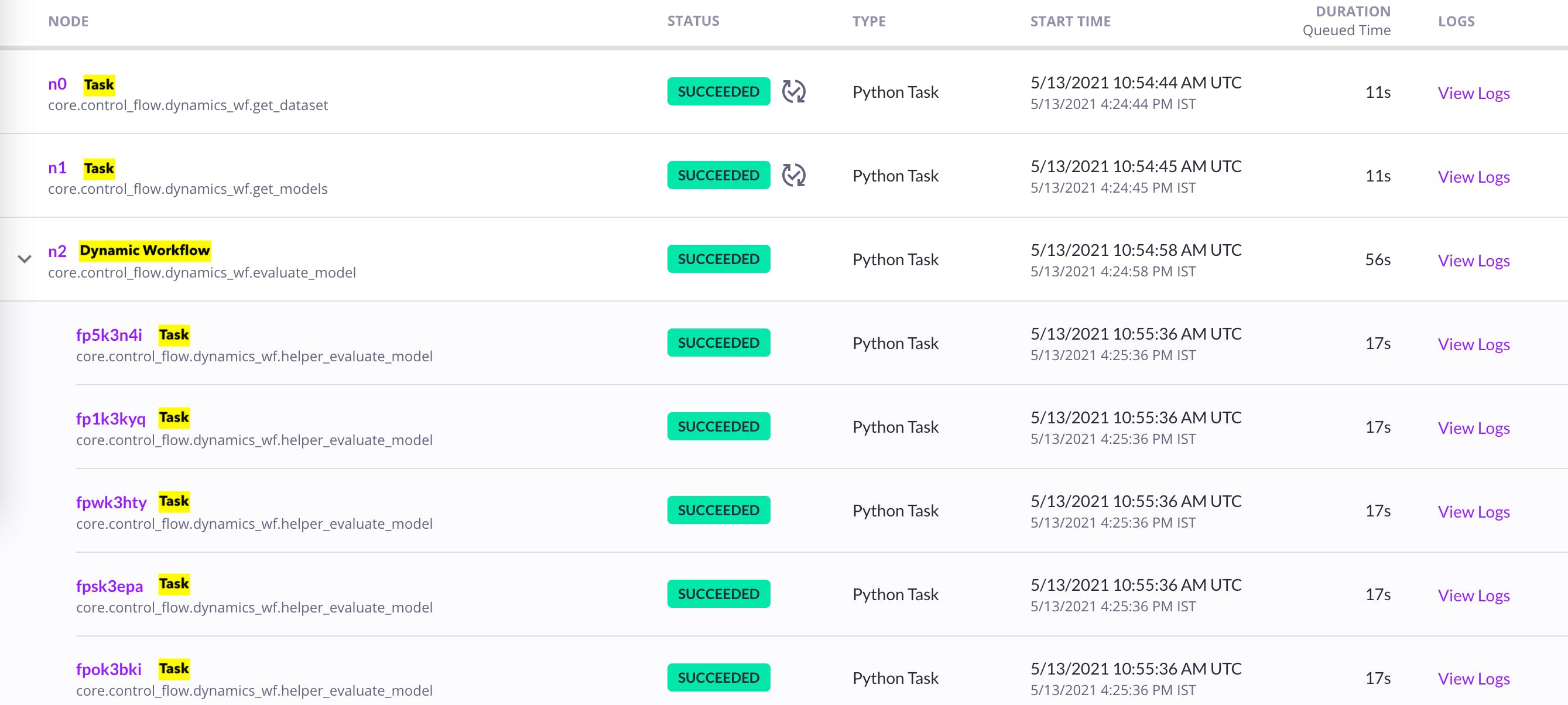

<span class=“code-inline”>get_dataset</span>, <span class=“code-inline”>get_models</span>, <span class=“code-inline”>helper_evaluate_model</span> are tasks, whereas <span class=“code-inline”>evaluate_model</span> is a dynamic workflow and <span class=“code-inline”>wf</span> is a workflow.

<span class=“code-inline”>evaluate_model</span> is dynamic because internally, it loops over a variable that’s known only at run-time. It also encapsulates a <span class=“code-inline”>helper_evaluate_model</span> task which gets called a specified number of times (depends on the number of KNN models).

If <span class=“code-inline”>evaluate_model</span> is given the <span class=“code-inline”>@workflow</span> decorator, the compilation fails ('Promise' object is not iterable) due to the inability to decide upon a static DAG.

Points to remember:

- A task runs at run-time, whereas a workflow runs at compile time. However, a dynamic workflow gets compiled at run-time and runs at run-time.

- When a task is called within a dynamic workflow (or simply, a workflow), it returns a Promise object. This object cannot be directly accessed within the Flyte entity. Nonetheless, if it needs to be accessed, pass it to a task or a dynamic workflow that unwraps its value.

- Values returned from a Python function (not a task) are accessible within a dynamic workflow (or simply, a workflow).

Here’s an animation depicting the data flow through Flyte's entities (task, workflow, dynamic workflow) for the above code:

To learn more about dynamic workflows, refer to the docs. There’s also a House Price Prediction example that you could refer to.

Conclusion

A Dynamic Workflow is a hybrid of a task and a workflow. It is useful when you have to decide on the parameters at run time dynamically.

A dynamic workflow, when combined with a map task, becomes an all-powerful combination. Dynamic workflow helps in spawning new instances (tasks/sub-workflows) within a workflow, and a map task spawns multiple inputs in a single task which leads to the creation of dynamically parallel pipelines. All of this can be implemented easily within Flyte.

We will talk about the map task in a follow-up post—stay tuned! ⭐️

Reference: machinelearningmastery.com/dynamic-ensemble..

Cover Image Credits: Photo by Julian Hochgesang on Unsplash.