Reproducing Liger Kernel Benchmarks on Phi3 Mini

Reproducibility is the cornerstone of science and engineering: without it, we can’t reliably build on each other’s findings and work. The “reproducibility crisis” is well known in many scientific fields, and machine learning is no exception. That’s why it’s important to independently benchmark new scientific and engineering advances, so that we can verify their correctness, generalizability, and reliability.

As one of the most active users of Flyte, the ML team at LinkedIn has moved all of its LLM training infrastructure to Flyte. The team recently published Liger Kernel, a Python package containing a collection of Triton kernels that accelerate LLM training by increasing token throughput and reducing the memory footprint of models.

Union helps ML engineers build production-grade AI/ML applications, and we strive to support and amplify open source projects that make LLM training more accessible. The Liger Kernel project does this by letting ML practitioners train models more efficiently in both single-node and multi-node setups. In this blog post, we reproduce the LinkedIn team’s findings with a scaled-down training setup: the Phi3 Mini model (3.8B parameters) on a single A100 GPU. Under these training conditions, we find similar gains in throughput and memory efficiency.

Creating a reproducible experiment workflow

To reproduce the training conditions as closely as possible, we adapted the benchmarking example in the official Liger Kernel repo into a Flyte workflow. The full codebase can be found here. We ran this experiment on Union Serverless; learn more about our Serverless offering here.

Benchmarking metrics

First, we adopt the same metrics as the official repo example: token throughput and peak reserved GPU memory.
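Both metrics are simple to compute once per-step measurements are available. The sketch below is a minimal, framework-free illustration, not the official repo’s implementation. It assumes each training step has already been timed and its token count recorded, and that the memory reading comes from something like `torch.cuda.max_memory_reserved()`, which reports bytes. The `StepRecord` type and the two-step warm-up skip are hypothetical choices made for this example.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class StepRecord:
    """Hypothetical per-step measurement collected during training."""
    num_tokens: int        # tokens processed in this step
    step_seconds: float    # wall-clock duration of this step
    reserved_bytes: int    # reserved GPU memory reading after this step, in bytes


def token_throughput(steps: Sequence[StepRecord], warmup_steps: int = 2) -> float:
    """Tokens per second over the measured steps, skipping warm-up steps.

    Early steps include one-off costs (e.g. Triton kernel compilation), so
    excluding them gives a steadier estimate (the cutoff is an assumption).
    """
    measured = steps[warmup_steps:]
    if not measured:
        raise ValueError("need more steps than warmup_steps")
    total_tokens = sum(s.num_tokens for s in measured)
    total_seconds = sum(s.step_seconds for s in measured)
    return total_tokens / total_seconds


def peak_reserved_gib(steps: Sequence[StepRecord]) -> float:
    """Largest reserved-memory reading across all steps, in GiB."""
    return max(s.reserved_bytes for s in steps) / 1024**3


# Made-up measurements, for illustration only.
steps = [
    StepRecord(num_tokens=1000, step_seconds=2.0, reserved_bytes=10 * 1024**3),
    StepRecord(num_tokens=1000, step_seconds=1.5, reserved_bytes=12 * 1024**3),
    StepRecord(num_tokens=2048, step_seconds=1.0, reserved_bytes=12 * 1024**3),
    StepRecord(num_tokens=2048, step_seconds=1.0, reserved_bytes=13 * 1024**3),
]
print(token_throughput(steps))   # → 2048.0 (the two warm-up steps are skipped)
print(peak_reserved_gib(steps))  # → 13.0
```

Aggregating tokens and time before dividing, rather than averaging per-step rates, keeps one unusually short step from skewing the result.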

Supervised fine-tuning with `trl` and `wandb`

Then, we define a task that performs the training loop. For each training run, we use:

- The `trl` library’s `SFTTrainer` to do instruction fine-tuning on the Alpaca dataset.

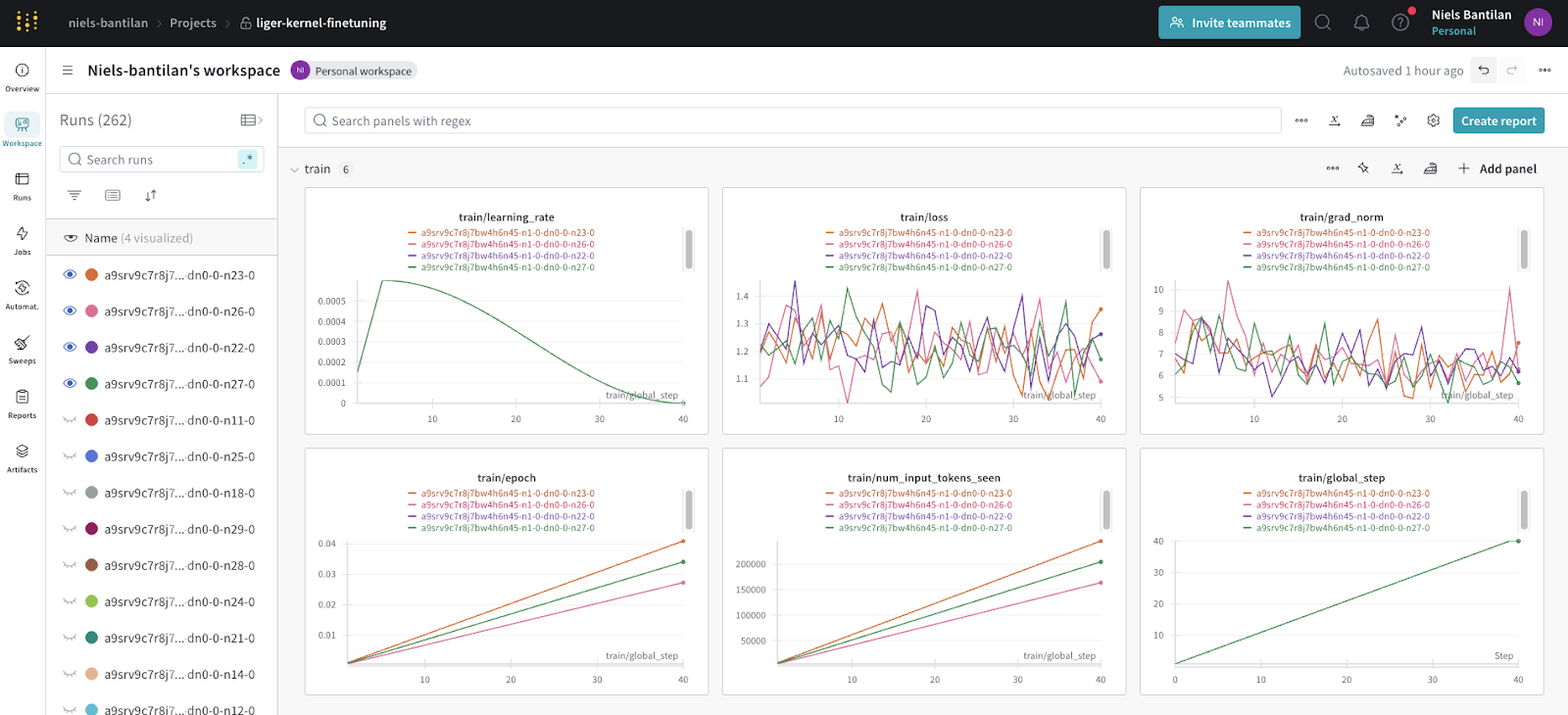

- The Weights and Biases flytekit plugin to automatically save training logs across experiment runs.

- An `A100` GPU for each training run in the experiment.

The `@wandb_init` decorator automatically creates runs under the specified `project` and `entity` (typically your username) in your Weights and Biases account, so you can track training metrics while the experiments run on Union.
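Instruction fine-tuning needs each Alpaca record (`instruction`, `input`, `output`) turned into a single training string. The sketch below shows one common approach, as an assumption rather than the benchmark’s actual preprocessing: prompt templates adapted from the Stanford Alpaca project, with a newline added before the response. A function like this could be passed to `SFTTrainer` through its `formatting_func` argument, although what that argument receives (single example vs. batch) has changed across `trl` versions.

```python
# Templates adapted from the Stanford Alpaca project (assumption: the
# benchmark's own formatting may differ).
PROMPT_WITH_INPUT = (
    "Below is an instruction that describes a task, paired with an input that "
    "provides further context. Write a response that appropriately completes "
    "the request.\n\n"
    "### Instruction:\n{instruction}\n\n### Input:\n{input}\n\n### Response:\n"
)
PROMPT_NO_INPUT = (
    "Below is an instruction that describes a task. Write a response that "
    "appropriately completes the request.\n\n"
    "### Instruction:\n{instruction}\n\n### Response:\n"
)


def format_alpaca_example(example: dict) -> str:
    """Render one Alpaca record as prompt + response text for SFT."""
    # Records with an empty "input" field use the shorter template.
    template = PROMPT_WITH_INPUT if example.get("input") else PROMPT_NO_INPUT
    # str.format ignores unused keyword arguments, so passing `input` is safe for both.
    prompt = template.format(
        instruction=example["instruction"], input=example.get("input", "")
    )
    return prompt + example["output"]


print(format_alpaca_example({"instruction": "Add 2 and 3.", "input": "", "output": "5"}))
```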

Parallelizing training runs with map tasks

To parallelize the experiment, we use a `map_task` wrapped inside a `@dynamic` workflow so that we can cache the results of the entire experiment. In the code below, we:

- Use `partial` to pre-load the `dataset_cache_dir` and `model_cache_dir` inputs into the `train_model` task.

- Wrap the partial task in a `map_task` to parallelize the experiment conditions specified in `training_args_list`.

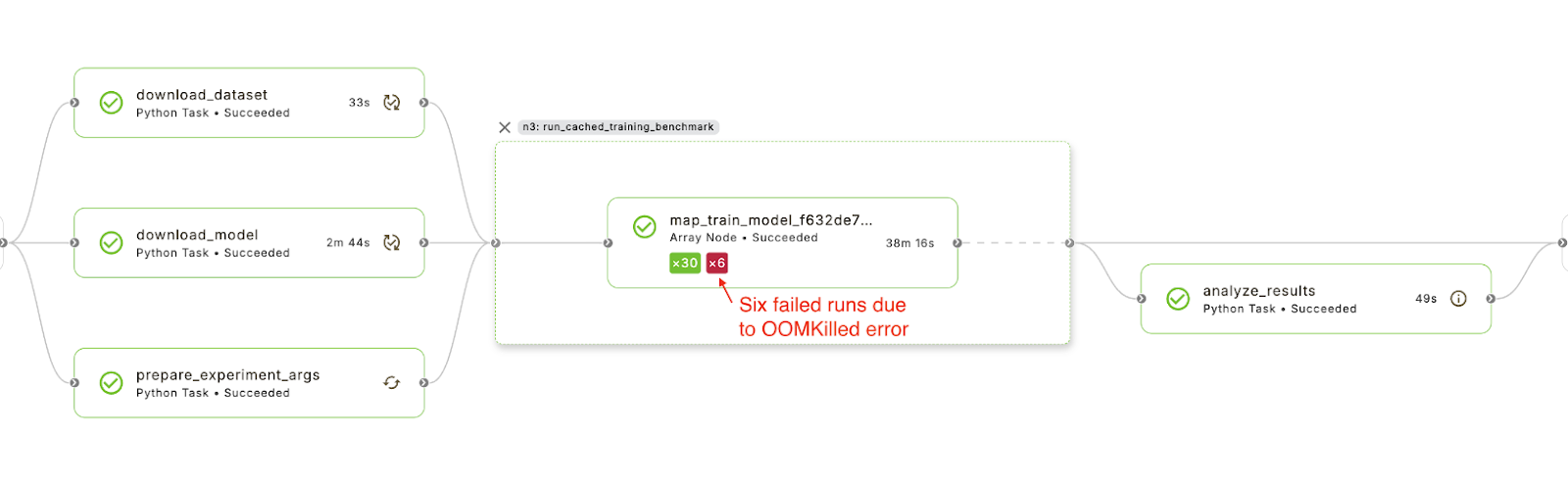
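Pre-loading inputs with `partial` follows the semantics of Python’s built-in `functools.partial`, which Flyte accepts for this pattern: the shared keyword arguments are bound once, and only the mapped argument varies per subtask. The local sketch below runs in plain Python rather than Flyte; `train_model` here is a hypothetical stand-in that just echoes its inputs, and the cache paths and training-argument keys are made up.

```python
from functools import partial


def train_model(training_args: dict, dataset_cache_dir: str, model_cache_dir: str) -> dict:
    """Hypothetical stand-in for the real training task: it only echoes its inputs."""
    return {
        "training_args": training_args,
        "dataset_cache_dir": dataset_cache_dir,
        "model_cache_dir": model_cache_dir,
    }


# Bind the inputs shared by every subtask once (paths are illustrative).
train_with_caches = partial(
    train_model,
    dataset_cache_dir="/tmp/cache/alpaca",
    model_cache_dir="/tmp/cache/phi3-mini",
)

# Only the per-condition training arguments vary across subtasks.
training_args_list = [
    {"use_liger_kernel": True, "per_device_train_batch_size": 8},
    {"use_liger_kernel": False, "per_device_train_batch_size": 8},
]
results = [train_with_caches(training_args=args) for args in training_args_list]
```

Because the cache directories are bound once, each subtask receives the same dataset and model locations, so the download steps upstream only need to run once.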

An important thing to note about the `map_task` is that we set `max_concurrency` to four, which caps the number of `A100`s provisioned at any given time. We also expect some experimental conditions to fail with `OOMKilled` (out-of-memory) errors, so we set `min_success_ratio` to `0.1`: the map task still succeeds even if up to 90% of its subtasks fail. As you can see in the image of an example run below, six subtasks failed while executing the map task.
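Both settings can be illustrated without a cluster. The sketch below is a local, thread-based imitation of the behavior described above, not Flyte’s implementation: `max_workers` plays the role of the concurrency limit, a `MemoryError` stands in for an `OOMKilled` subtask, and the required number of successes is assumed to be the ratio times the number of subtasks, rounded up. The function names and the batch-size cutoff are hypothetical.

```python
import math
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Optional, Sequence


def run_mapped(
    fn: Callable[[dict], dict],
    items: Sequence[dict],
    max_workers: int = 4,
    min_success_ratio: float = 0.1,
) -> List[Optional[dict]]:
    """Local imitation of a map task with a concurrency cap and a success threshold."""

    def attempt(item: dict):
        try:
            return True, fn(item)
        except MemoryError:  # stand-in for an OOMKilled subtask
            return False, None

    # At most `max_workers` calls run at once, like capping how many GPUs are in use.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        outcomes = list(pool.map(attempt, items))  # preserves input order

    n_succeeded = sum(ok for ok, _ in outcomes)
    required = math.ceil(min_success_ratio * len(items))  # assumption: rounded up
    if n_succeeded < required:
        raise RuntimeError(f"{n_succeeded}/{len(items)} subtasks succeeded; needed {required}")
    # Failed subtasks show up as None, so downstream analysis must skip them.
    return [result if ok else None for ok, result in outcomes]


def fake_train(args: dict) -> dict:
    """Hypothetical subtask that runs out of memory above batch size 16."""
    if args["per_device_train_batch_size"] > 16:
        raise MemoryError("simulated OOM")
    return {"batch_size": args["per_device_train_batch_size"]}


results = run_mapped(fake_train, [{"per_device_train_batch_size": b} for b in (4, 8, 16, 32, 64)])
# results == [{'batch_size': 4}, {'batch_size': 8}, {'batch_size': 16}, None, None]
```

With a ratio of 1.0, the two simulated OOMs above would fail the whole run; a low ratio lets the experiment finish and record which conditions ran out of memory.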

Visualizing a report with Flyte Decks

To report the results of our experiment, we create a task that aggregates and analyzes the log history of all training runs and renders the analysis as a Flyte Deck.
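The core of such a report is grouping runs by experimental condition and comparing Liger Kernel against the baseline. The sketch below is a hedged, stdlib-only version of that step. The run-record keys (`batch_size`, `use_liger_kernel`, `tokens_per_second`, `peak_reserved_gib`) are hypothetical, failed runs are assumed to arrive as `None`, and the numbers in the usage example are made up rather than measured. Inside a Flyte task, an HTML string like the one produced here could then be displayed by creating a `flytekit.Deck` with it (with decks enabled on the task).

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, List, Optional, Tuple


def summarize(runs: List[Optional[dict]]) -> Dict[Tuple[int, bool], dict]:
    """Average throughput and peak memory per (batch size, use_liger_kernel) condition."""
    groups = defaultdict(list)
    for run in runs:
        if run is not None:  # skip failed (e.g. OOMKilled) runs
            groups[(run["batch_size"], run["use_liger_kernel"])].append(run)
    return {
        key: {
            "tokens_per_second": mean(r["tokens_per_second"] for r in group),
            "peak_reserved_gib": mean(r["peak_reserved_gib"] for r in group),
        }
        for key, group in groups.items()
    }


def percent_change(new: float, baseline: float) -> float:
    return 100.0 * (new - baseline) / baseline


def comparison_rows(summary) -> List[Tuple[int, str, str]]:
    """One row per batch size comparing Liger Kernel against the baseline."""
    rows = []
    for batch_size in sorted({bs for bs, _ in summary}):
        liger = summary.get((batch_size, True))
        base = summary.get((batch_size, False))
        if liger and base:
            rows.append((
                batch_size,
                f"{percent_change(liger['tokens_per_second'], base['tokens_per_second']):+.1f}%",
                f"{percent_change(liger['peak_reserved_gib'], base['peak_reserved_gib']):+.1f}%",
            ))
        else:
            # One of the two conditions had no successful runs at this batch size.
            rows.append((batch_size, "n/a", "n/a"))
    return rows


def to_html(rows) -> str:
    header = "<tr><th>Batch size</th><th>Throughput change</th><th>Peak memory change</th></tr>"
    body = "".join(f"<tr><td>{bs}</td><td>{tp}</td><td>{mem}</td></tr>" for bs, tp, mem in rows)
    return f"<table>{header}{body}</table>"


# Made-up numbers, for illustration only.
runs = [
    {"batch_size": 8, "use_liger_kernel": True, "tokens_per_second": 140.0, "peak_reserved_gib": 8.0},
    {"batch_size": 8, "use_liger_kernel": True, "tokens_per_second": 160.0, "peak_reserved_gib": 8.0},
    {"batch_size": 8, "use_liger_kernel": False, "tokens_per_second": 100.0, "peak_reserved_gib": 10.0},
    {"batch_size": 16, "use_liger_kernel": True, "tokens_per_second": 170.0, "peak_reserved_gib": 12.0},
    None,  # the batch-size-16 baseline run failed
]
rows = comparison_rows(summarize(runs))
# rows == [(8, '+50.0%', '-20.0%'), (16, 'n/a', 'n/a')]
```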

Composing a benchmarking workflow

Finally, we encapsulate the different components of the pipeline into a Flyte workflow with the following inputs:

- `experiment_args` specifies a mapping of all the experimental conditions we want to map over in our `map_task`.

- `training_args` contains the default training arguments that the `train_model` task uses to set up and run fine-tuning.

- `n_runs` specifies the number of repeats for each experimental condition so that we can get aggregate metrics.
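These three inputs have to be expanded into the list of per-run arguments that the map task iterates over. A minimal sketch of that expansion, assuming `experiment_args` maps each argument name to the list of values to try and that every combination is repeated `n_runs` times, could look like this. The function name, the `run_index` key, and the example argument names are hypothetical.

```python
from itertools import product
from typing import Dict, List


def build_training_args_list(
    experiment_args: Dict[str, list],
    training_args: dict,
    n_runs: int,
) -> List[dict]:
    """Cross every experimental condition and repeat each one n_runs times."""
    keys = list(experiment_args)
    args_list = []
    # product() yields one tuple of values per combination of conditions.
    for values in product(*(experiment_args[k] for k in keys)):
        for run_index in range(n_runs):
            args = dict(training_args)       # start from the defaults (a fresh copy)
            args.update(zip(keys, values))   # override the keys that vary
            args["run_index"] = run_index    # hypothetical key to tell repeats apart
            args_list.append(args)
    return args_list


training_args_list = build_training_args_list(
    experiment_args={
        "use_liger_kernel": [True, False],
        "per_device_train_batch_size": [8, 16, 32],
    },
    training_args={"max_steps": 20},
    n_runs=3,
)
# 2 kernel settings x 3 batch sizes x 3 repeats = 18 subtasks
```

Copying `training_args` for every entry keeps the conditions independent, so overriding one run’s batch size cannot leak into another.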

To run the experiment, we specify the inputs to the `benchmarking_experiment` workflow in a YAML file.

We then pass this YAML file to the `union run` command via the `--inputs-file` argument to launch the benchmarking experiment.
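As an illustration of the shape such an inputs file might take, the sketch below builds it in Python and writes it out as JSON. Because YAML 1.2 is a superset of JSON, a YAML loader should read the file as-is; sticking to plain integers, strings, and booleans avoids the few corner cases where older YAML parsers disagree. The top-level keys mirror the workflow’s three inputs, but the values and the `use_liger_kernel`, `per_device_train_batch_size`, and `max_steps` keys are assumptions rather than the post’s actual configuration.

```python
import json
from pathlib import Path

# Hypothetical inputs for the benchmarking_experiment workflow; the top-level
# keys mirror its three inputs, but the values are illustrative only.
INPUTS = {
    "experiment_args": {
        "use_liger_kernel": [True, False],
        "per_device_train_batch_size": [8, 16, 32],
    },
    "training_args": {"max_steps": 20},
    "n_runs": 3,
}


def render_inputs(inputs: dict) -> str:
    """Serialize the inputs as JSON, which YAML 1.2 parsers also accept."""
    return json.dumps(inputs, indent=2)


def write_inputs_file(inputs: dict, path: str = "inputs.yaml") -> Path:
    """Write the rendered inputs to disk and return the file path."""
    out = Path(path)
    out.write_text(render_inputs(inputs))
    return out
```

Calling `write_inputs_file(INPUTS)` produces the file that is then handed to `union run` through `--inputs-file`; a hand-written YAML file with the same keys works just as well.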

Analyzing the results

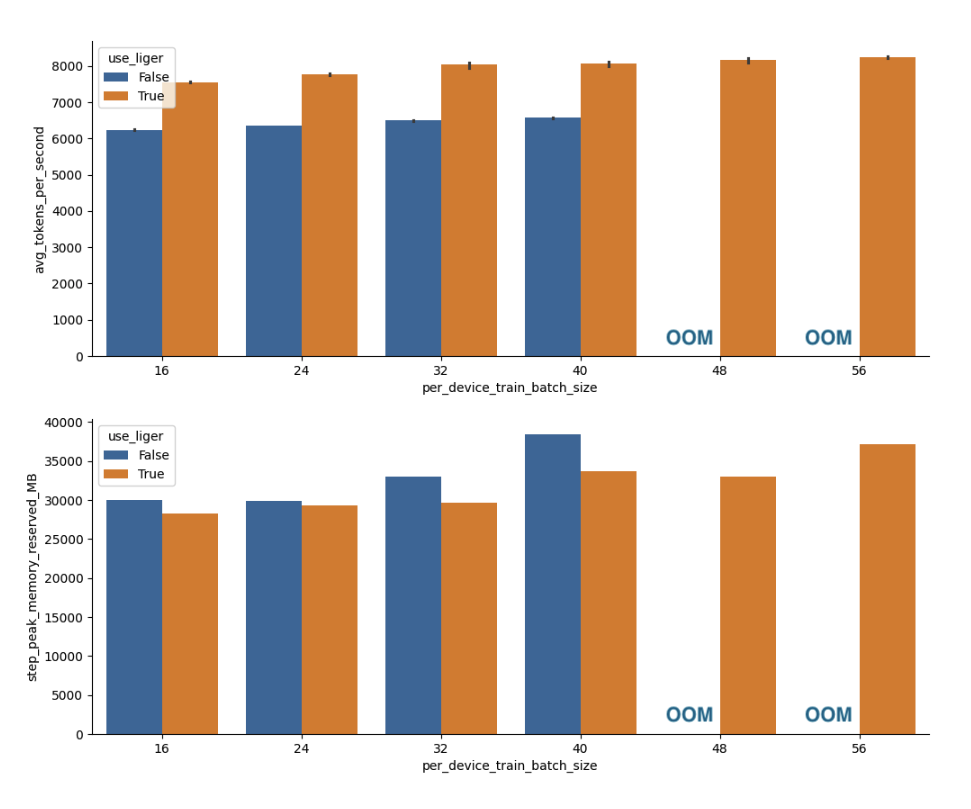

As the plot above shows, using Liger Kernel during training prevents out-of-memory errors at the larger batch sizes we tested. We also found that token throughput was 20-30% higher and peak reserved memory was 6-13% lower with Liger Kernel than with the vanilla `transformers` layers, consistent with the findings reported in the official Liger Kernel repo.

Conclusion

In this benchmark experiment, we were able to reproduce the throughput and memory efficiency improvements reported by the original benchmark, but with a smaller model and a single A100 GPU. In addition, Union allowed us to:

- Cache the outputs of intermediate steps in our pipeline so that we didn’t have to re-run steps such as downloading the dataset and model from the Hugging Face Hub.

- Use any Python framework we wanted for model training.

- Easily integrate with existing monitoring tools like Weights and Biases.

- Parallelize the experiment runs with map tasks, controlling how many tasks run concurrently and how many subtasks must succeed for the map task to count as successful.

- Produce rich visualizations of the final experiment analysis with Flyte Decks.

If you’re training AI models, you can access GPUs on Union Serverless!

<a href="https://docs.union.ai/serverless/tutorials/language-models/liger-kernel-finetuning" target="_blank" class="button w-inline-block">Run this benchmark on Union Serverless ↗</a>