Build an Event-Driven Neural Style Transfer Application Using AWS Lambda

To build a production-ready ML application and keep it stable in the long run, we need to address a long checklist of requirements: ease of model iteration, reproducibility, infrastructure, automation, resources, memory, and so on. On top of that, we need a seamless developer experience. How hard could it be?

Flyte can handle the former set of issues because:

- it’s a workflow automation platform that helps maintain and reproduce pipelines.

- it provides the control knobs for infrastructure, resources, and memory.

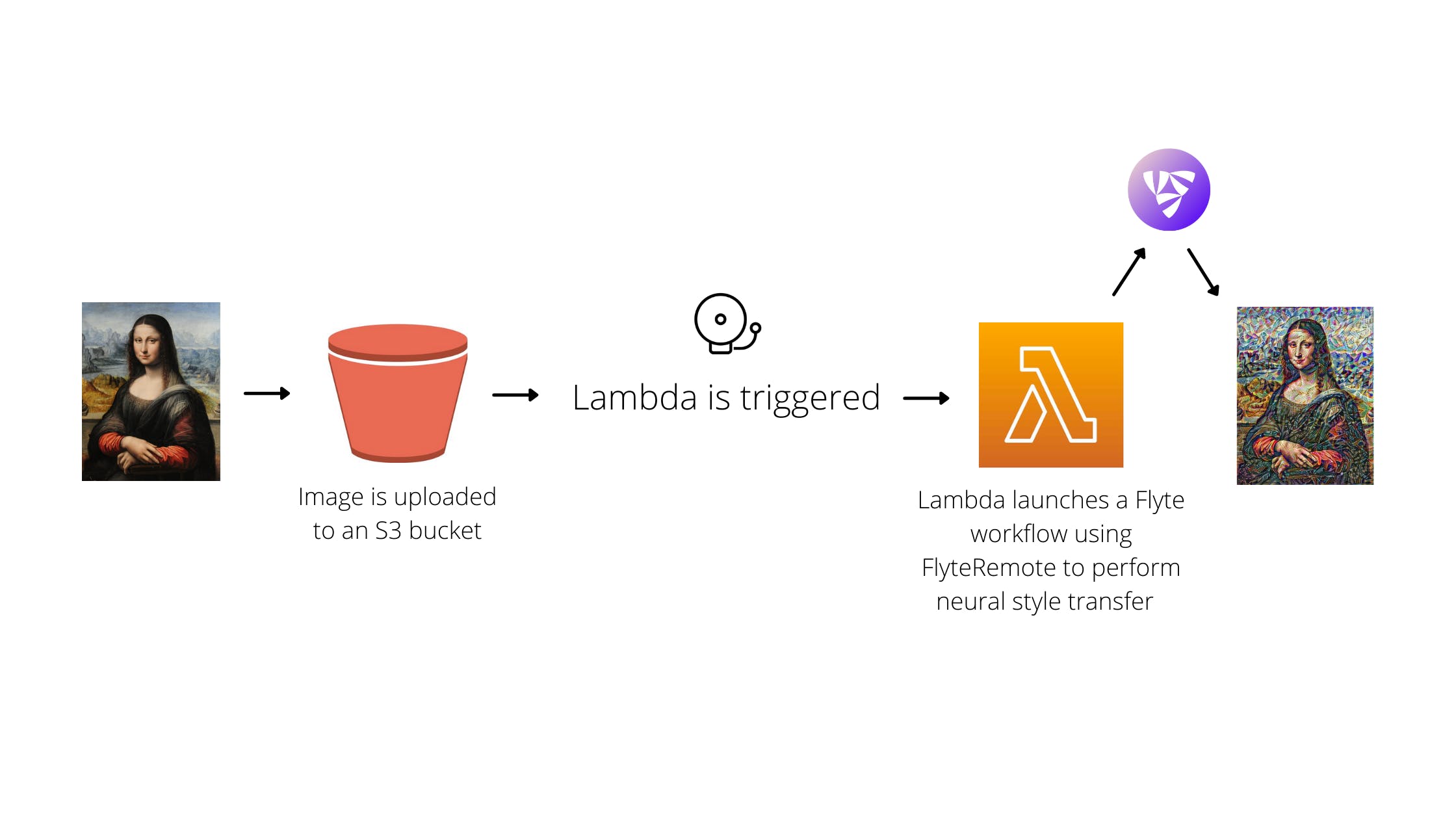

Also, Flyte simplifies the developer experience. In this blog post, we’ll see how by building a neural style transfer application using Flyte and AWS Lambda. We’ll code the end-to-end pipeline and assign the required compute to run the code. Further, we’ll design an event-driven mechanism that triggers the pipeline when a user uploads an image and outputs a stylized image. From the user’s perspective, uploading an image is all it takes to get a stylized output.

Since the application has to be triggered by an event (an image upload), AWS Lambda is a suitable companion to Flyte. It is a serverless, event-driven compute service, and our neural style transfer application will leverage this event-driven behavior.

Let’s look at how we could stitch the pipeline automation and event-driven service together using Flyte and AWS Lambda.

Application Code

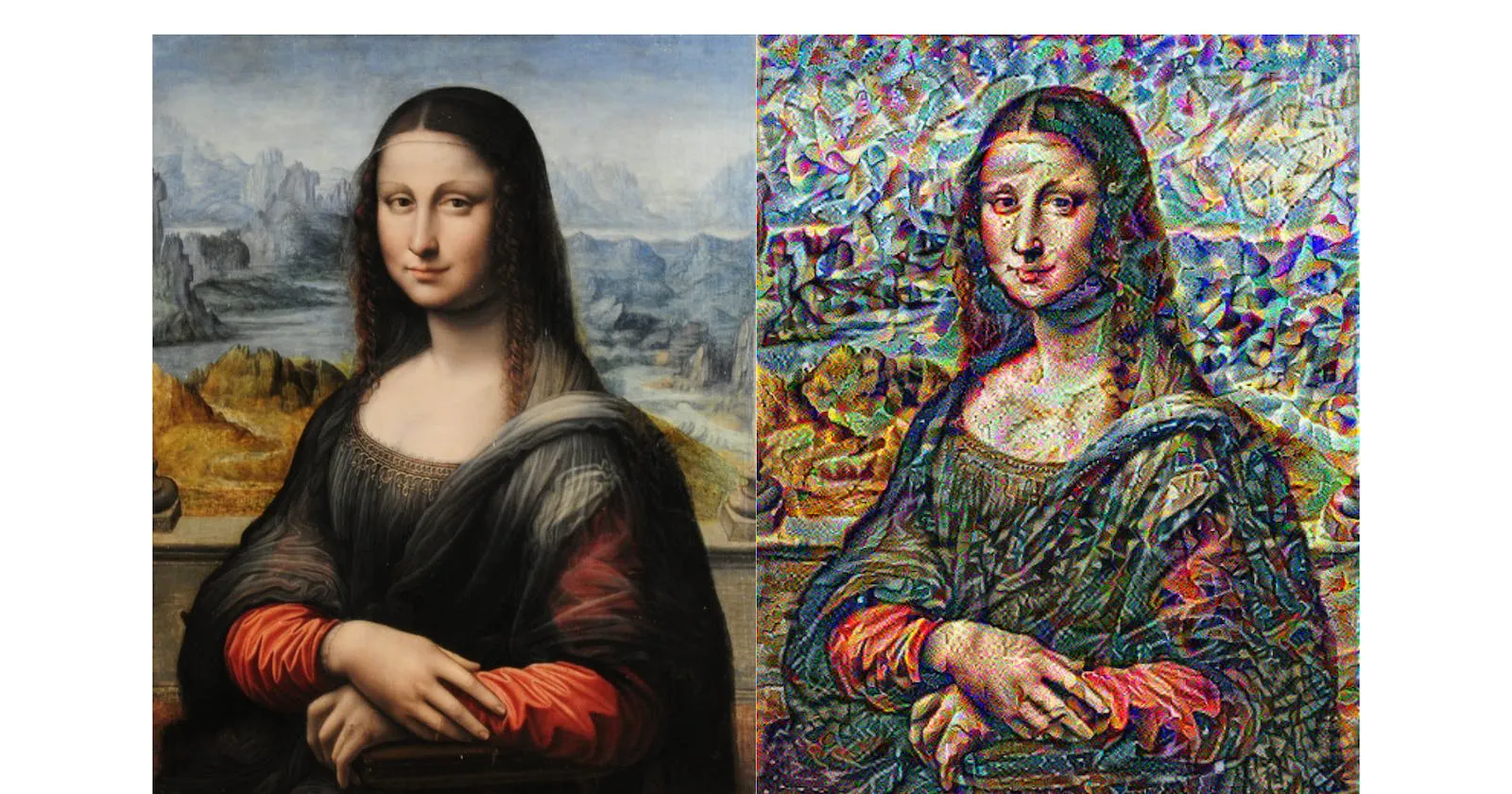

Neural style transfer applies the style of a style image to a content image. The output image is a blend of the content and style images.

To get started with the code, first import and configure the dependencies.

Note: This code is an adaptation of the Neural style transfer example from the TensorFlow documentation. To run the code, ensure the <span class="code-inline">tensorflow</span>, <span class="code-inline">flytekit</span>, and <span class="code-inline">Pillow</span> libraries are installed through <span class="code-inline">pip</span>.

<span class="code-inline">content_layers</span> and <span class="code-inline">style_layers</span> are the layers of the VGG19 model, which we’ll use to build our model, and the <span class="code-inline">tensor_to_image</span> task converts a tensor to an image.

The first step of the model building process is to fetch the image and preprocess it. Define a <span class="code-inline">@task</span> to load the image and limit its maximum dimension to 512 pixels.

The <span class="code-inline">preprocess_img</span> task downloads the content and style image files, and resizes them using the <span class="code-inline">load_img</span> function.
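The resizing rule can be illustrated without TensorFlow. The sketch below is a plain-Python approximation, assuming the behavior of the TensorFlow tutorial this code is adapted from, where the longer side is always scaled to the maximum dimension (so smaller images are scaled up as well). The helper name <span class="code-inline">scaled_shape</span> is hypothetical and not part of the pipeline.

```python
def scaled_shape(height: int, width: int, max_dim: int = 512) -> tuple:
    """Return (height, width) rescaled so that the longer side equals max_dim.

    Assumption: the aspect ratio is preserved and fractional pixels are
    truncated, mirroring an integer cast of the scaled shape.
    """
    if height <= 0 or width <= 0:
        raise ValueError("image dimensions must be positive")
    longer_side = max(height, width)
    # Integer arithmetic avoids floating-point rounding surprises.
    return (height * max_dim // longer_side, width * max_dim // longer_side)


if __name__ == "__main__":
    print(scaled_shape(1024, 768))
```

For a 1024×768 image, this yields a 512×384 target shape, which is the shape the image would then be resized to.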

With the data ready to be used by the model, define a VGG19 model that returns the style and content tensors.

The <span class="code-inline">vgg_layers</span> function returns a list of intermediate layer outputs on top of which the model is built (note that we’re using a pretrained VGG network), and the <span class="code-inline">gram_matrix</span> function summarizes the style of an image as the correlations between its feature channels. When the model is called on an image, it returns the Gram matrix of the <span class="code-inline">style_layers</span> and the content of the <span class="code-inline">content_layers</span>.
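To make the Gram matrix concrete, here is a minimal plain-Python sketch. It assumes the feature map has been flattened into a list of spatial locations, each holding one activation per channel, and that the result is averaged over the number of locations (as in the TensorFlow tutorial). The actual task would operate on tensors instead.

```python
from typing import List, Sequence


def gram_matrix(features: Sequence[Sequence[float]]) -> List[List[float]]:
    """Compute channel-by-channel correlations, averaged over locations.

    features[p][c] is the activation of channel c at spatial location p.
    """
    num_locations = len(features)
    if num_locations == 0:
        raise ValueError("features must contain at least one location")
    num_channels = len(features[0])
    gram = [[0.0] * num_channels for _ in range(num_channels)]
    for location in features:
        for c in range(num_channels):
            for d in range(num_channels):
                # Accumulate the product of every pair of channels.
                gram[c][d] += location[c] * location[d]
    return [[value / num_locations for value in row] for row in gram]


if __name__ == "__main__":
    # Two locations, two channels.
    print(gram_matrix([[1.0, 2.0], [3.0, 4.0]]))
```

The result is symmetric: entry (c, d) measures how strongly channels c and d fire together, regardless of where in the image that happens.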

Next comes the implementation of the style transfer algorithm. Calculate the total loss (style + content) by considering the weighted combination of the two losses.
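The weighted combination can be sketched in plain Python as follows. This is an illustration under assumptions, not the pipeline's code: layer outputs are represented as flat lists of floats keyed by layer name, each loss is a mean squared error averaged over layers, and the default weights are borrowed from the TensorFlow tutorial.

```python
from typing import Dict, Sequence


def mean_squared_error(a: Sequence[float], b: Sequence[float]) -> float:
    """Mean of squared element-wise differences."""
    if len(a) != len(b) or len(a) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def style_content_loss(
    style_outputs: Dict[str, Sequence[float]],
    style_targets: Dict[str, Sequence[float]],
    content_outputs: Dict[str, Sequence[float]],
    content_targets: Dict[str, Sequence[float]],
    style_weight: float = 1e-2,   # assumed default
    content_weight: float = 1e4,  # assumed default
) -> float:
    """Weighted sum of the per-layer style and content losses."""
    style_loss = sum(
        mean_squared_error(style_outputs[name], style_targets[name])
        for name in style_outputs
    )
    style_loss *= style_weight / len(style_outputs)

    content_loss = sum(
        mean_squared_error(content_outputs[name], content_targets[name])
        for name in content_outputs
    )
    content_loss *= content_weight / len(content_outputs)
    return style_loss + content_loss
```

Raising <span class="code-inline">style_weight</span> relative to <span class="code-inline">content_weight</span> pushes the result toward the style image, and vice versa.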

Call <span class="code-inline">style_content_loss</span> from within <span class="code-inline">tf.GradientTape</span> to update the image.

The <span class="code-inline">train_step</span> task initializes the style and content target values (tensors), computes the total variation loss, runs gradient descent, applies the processed gradients, and clips the pixel values of the image between 0 and 1. Define the <span class="code-inline">clip_0_1</span> function as follows:
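As a minimal sketch in plain Python (assuming pixel values arrive as a flat list of floats rather than a tensor), the clamping could look like this. With TensorFlow tensors, <span class="code-inline">tf.clip_by_value(image, clip_value_min=0.0, clip_value_max=1.0)</span> is the usual equivalent.

```python
from typing import List, Sequence


def clip_0_1(values: Sequence[float]) -> List[float]:
    """Clamp every pixel value into the closed interval [0, 1]."""
    return [min(1.0, max(0.0, value)) for value in values]


if __name__ == "__main__":
    print(clip_0_1([-0.5, 0.25, 1.7]))
```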

Create a <span class="code-inline">@dynamic</span> workflow to trigger the <span class="code-inline">train_step</span> task for a specified number of <span class="code-inline">epochs</span> and <span class="code-inline">steps_per_epoch</span>.
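Stripped of the Flyte decorators, the control flow amounts to a nested loop. The sketch below is plain Python under that assumption; <span class="code-inline">run_training</span> is a hypothetical name, and <span class="code-inline">step</span> stands in for the <span class="code-inline">train_step</span> task.

```python
from typing import Callable, TypeVar

T = TypeVar("T")


def run_training(
    image: T, epochs: int, steps_per_epoch: int, step: Callable[[T], T]
) -> T:
    """Apply `step` to the image epochs * steps_per_epoch times in sequence."""
    for _ in range(epochs):
        for _ in range(steps_per_epoch):
            # Each step consumes the previous step's output.
            image = step(image)
    return image
```

Because every step depends on the result of the previous one, the steps run one after another rather than in parallel.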

A <span class="code-inline">tf.Variable</span> stores the content image. Because <span class="code-inline">image</span> is a <span class="code-inline">tf.Variable</span>, operations on it inside a <span class="code-inline">tf.GradientTape</span> context are watched and recorded for automatic differentiation.

Lastly, define a <span class="code-inline">@workflow</span> to encapsulate the tasks and generate a stylized image.

Once the pipeline is deployed, the subsequent step would be to set up the S3 bucket and configure Lambda.

Configure AWS S3 Bucket and Lambda

Images will be uploaded to the S3 bucket, and Lambda will be used to trigger the Flyte workflow as soon as an image is uploaded.

S3 Bucket

To configure the S3 bucket:

- Open the Amazon S3 console.

- Choose Buckets.

- Choose Create bucket.

- Give the bucket a name, e.g., “neural-style-transfer”.

- Choose the appropriate AWS region (make sure Lambda is created in the same AWS region).

- Block or unblock public access (this tutorial assumes that public access is granted).

- Choose Create bucket.

Lambda

A Lambda function can be created from scratch, from a blueprint, from a container image, or from the serverless app repository. A blueprint provides sample Lambda code; in our case, that would be an S3 blueprint. However, since we need to connect to FlyteRemote from within Lambda, we have to install the <span class="code-inline">flytekit</span> library. Libraries can be installed in Lambda through either the zip file or the container image approach.

A zip file is the easiest way to get <span class="code-inline">flytekit</span> into Lambda, but because Lambda limits the size of the zip file, the container image approach is more feasible.

Container Image

To create a container image on your machine:

1. Create a project directory (e.g., lambda) to accommodate the lambda function.

2. Create 4 files in the directory: <span class="code-inline">lambda_function.py</span>, <span class="code-inline">Dockerfile</span>, <span class="code-inline">requirements.txt</span>, and <span class="code-inline">flyte.config</span>.

3. <span class="code-inline">lambda_function.py</span>: encapsulate the code to fetch the uploaded image, instantiate a FlyteRemote object, and trigger the Flyte workflow.

ℹ️ FlyteRemote provides a programmatic interface to interact with the Flyte backend.

Make sure to fill in the <span class="code-inline">endpoint</span>, <span class="code-inline">default_project</span> (e.g. <span class="code-inline">flytesnacks</span>), <span class="code-inline">default_domain</span> (e.g. <span class="code-inline">development</span>), and the name of the launch plan (e.g. <span class="code-inline">neural_style_transfer.example.neural_style_transfer_wf</span>).
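The event-handling part of step 3 could be sketched as below. This is a minimal illustration, not the tutorial's handler: it relies only on the standard layout of S3 event notifications (bucket name and URL-encoded object key under each record), and it replaces the FlyteRemote call with a hypothetical <span class="code-inline">trigger_workflow</span> callable so the example runs without a Flyte backend. Passing the image as an <span class="code-inline">s3://</span> URI is also an assumption.

```python
from typing import Callable, List, Tuple
from urllib.parse import unquote_plus


def extract_s3_objects(event: dict) -> List[Tuple[str, str]]:
    """Return (bucket, key) pairs for every record in an S3 event."""
    objects = []
    for record in event.get("Records", []):
        s3_info = record["s3"]
        bucket = s3_info["bucket"]["name"]
        # Object keys arrive URL-encoded (spaces become "+").
        key = unquote_plus(s3_info["object"]["key"])
        objects.append((bucket, key))
    return objects


def lambda_handler(event, context, trigger_workflow: Callable[[str], object] = print):
    """Trigger one workflow execution per uploaded object.

    trigger_workflow is a hypothetical stand-in for the code that would
    launch the Flyte launch plan through FlyteRemote.
    """
    uris = [f"s3://{bucket}/{key}" for bucket, key in extract_s3_objects(event)]
    for uri in uris:
        trigger_workflow(uri)
    return {"triggered": uris}
```

Lambda invokes the handler with only <span class="code-inline">event</span> and <span class="code-inline">context</span>, so the default value of the third parameter is what runs in production; the parameter exists to make the handler easy to test locally.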

4. flyte.config: add configuration to connect to Flyte through FlyteRemote.

Make sure to fill in or modify the configuration values. You can add “client secret” to the lambda’s environment variables, which will be explained in the Permissions section.

5. requirements.txt

6. Dockerfile: copy <span class="code-inline">lambda_function.py</span>, <span class="code-inline">flyte.config</span>, and <span class="code-inline">requirements.txt</span> to the root. Set <span class="code-inline">CMD</span> to the handler defined in the <span class="code-inline">lambda_function.py</span> file.

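7. Build a Docker image from the project directory, e.g., with <span class="code-inline">docker build -t lambda .</span> (the image name <span class="code-inline">lambda</span> is only an example).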

8. Authenticate Docker CLI to the Amazon ECR registry.

Make sure to replace text in <span class="code-inline"><></span>.

9. Create a repository in the ECR.

- Open the Amazon ECR console.

- Choose Repositories.

- Choose Create repository (e.g., lambda).

10. Tag your Docker image and push the image to the newly created repository.

Make sure to replace text in <span class="code-inline"><></span> in the registry details.

That’s it! You now have your image in the ECR.

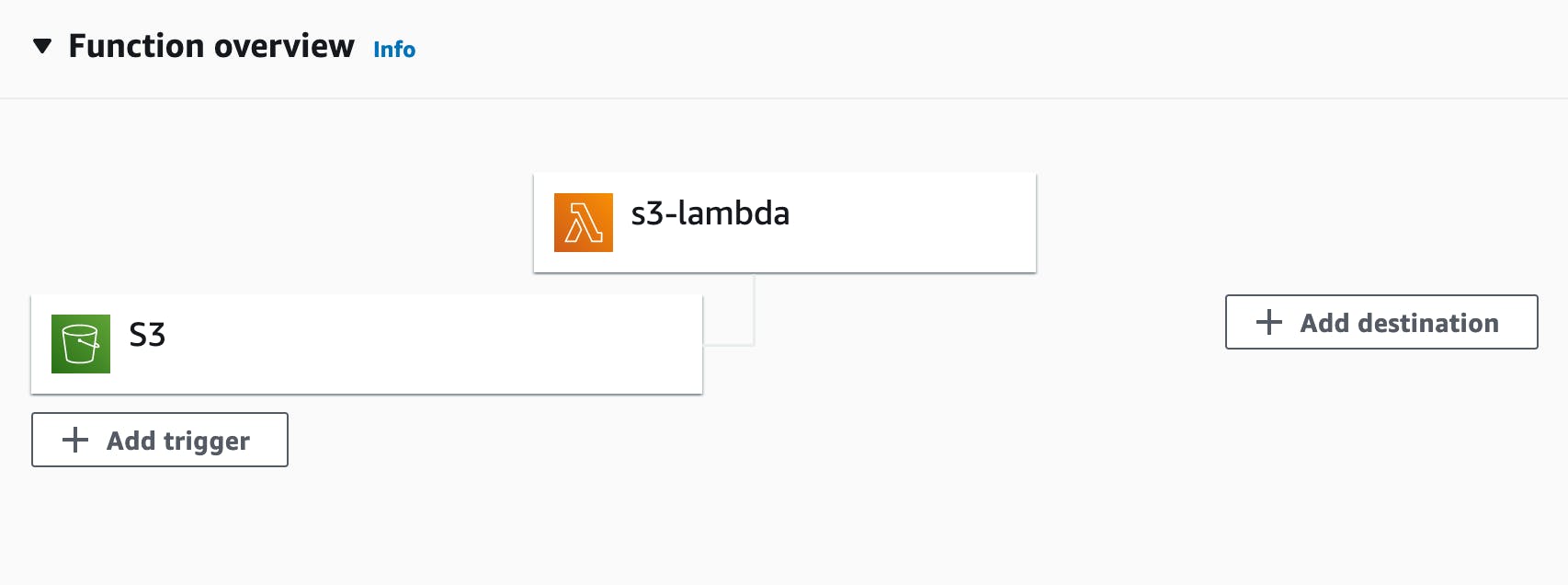

Lambda Configuration

To configure Lambda:

- Open the Functions page of the Lambda console.

- Choose Create function.

- Choose Container image.

- Enter the function name (e.g., s3-lambda).

- Enter the container image URI (available in the Amazon ECR console under the Repositories dashboard).

- Choose Create function.

You now have the lambda configured!

Permissions

The S3 bucket and Lambda are currently separate entities. To trigger Lambda as soon as an image is uploaded to the S3 bucket, we must establish a connection between them.

Connecting them also requires setting up the required permissions. But before configuring the permissions, copy the bucket and Lambda ARNs.

Bucket ARN:

- Open the Amazon S3 console.

- Choose Buckets.

- Choose your bucket.

- Choose Properties.

- Copy the ARN.

Lambda ARN:

- Open the Functions page of the Lambda console.

- Choose Functions.

- Choose your Lambda.

- Choose Configuration and then choose Permissions.

- Click on the role in Execution role.

- Copy the ARN.

S3 Bucket

To set up permissions for the S3 bucket:

- Go to the S3 bucket you created.

- Select Permissions.

- In the Bucket policy, choose Edit.

- Add the following policy:

Make sure to fill in the Lambda execution role ARN and the S3 bucket ARN.
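For illustration, a bucket policy of this kind can be generated with a few lines of Python. The sketch below is an assumption about what such a policy might contain: it grants the Lambda execution role read access (<span class="code-inline">s3:GetObject</span>) to the objects in the bucket. The function name, the chosen action, and the example ARNs are hypothetical, so adjust them to your setup.

```python
import json


def bucket_policy(role_arn: str, bucket_arn: str) -> str:
    """Build a bucket policy letting one IAM role read objects in the bucket."""
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                # The principal is the Lambda execution role.
                "Principal": {"AWS": role_arn},
                # Assumed action: read-only access to objects.
                "Action": ["s3:GetObject"],
                "Resource": f"{bucket_arn}/*",
            }
        ],
    }
    return json.dumps(policy, indent=2)


if __name__ == "__main__":
    print(
        bucket_policy(
            "arn:aws:iam::123456789012:role/example-role",  # placeholder ARN
            "arn:aws:s3:::neural-style-transfer",
        )
    )
```

The printed JSON can be pasted into the bucket policy editor.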

Lambda

To set up permissions for the Lambda:

1. Follow steps 1–4 outlined in the Lambda ARN section.

2. Under Permissions, choose Add Permissions.

3. In the dropdown, choose Create inline policy.

4. Under the JSON tab, paste the following:

Make sure to fill in the S3 bucket ARN.

5. Choose Review policy.

6. For Name, enter a name for your policy.

7. Choose Create policy.
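The inline policy from step 4 differs from a bucket policy in that it is attached to the role itself, so it carries no <span class="code-inline">Principal</span>. A minimal sketch, assuming the Lambda only needs to list the bucket and read its objects (the function name and the selected actions are assumptions):

```python
import json


def lambda_inline_policy(bucket_arn: str) -> str:
    """Build an identity-based policy granting read access to one bucket."""
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                # Assumed actions: list the bucket and read its objects.
                "Action": ["s3:ListBucket", "s3:GetObject"],
                # ListBucket applies to the bucket, GetObject to its objects.
                "Resource": [bucket_arn, f"{bucket_arn}/*"],
            }
        ],
    }
    return json.dumps(policy, indent=2)


if __name__ == "__main__":
    print(lambda_inline_policy("arn:aws:s3:::neural-style-transfer"))
```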

You can add <span class="code-inline">FLYTE_CREDENTIALS_CLIENT_SECRET</span> to the Lambda’s environment variables for use when initializing FlyteRemote. To do so:

- Follow steps 1-3 outlined in the Lambda ARN section.

- Choose Configuration and then choose Environment Variables.

- Set the key as <span class="code-inline">FLYTE_CREDENTIALS_CLIENT_SECRET</span>, and the value should be your secret.

Now comes the fun part: linking Lambda to the S3 bucket!

Trigger

To set up the trigger:

- Follow steps 1-3 outlined in the Lambda ARN section.

- Choose Configuration and then choose Triggers.

- Click Add trigger.

- In the Select a trigger dropdown, choose S3.

- Choose your S3 bucket under Bucket.

- Choose Add.

Test the Application

To test the application, upload an image to the S3 bucket. On your Flyte console, under the neural style transfer workflow, check if the execution got triggered. The output of the execution should be your stylized image!

Next Steps

To summarize, we’ve built an event-driven application that triggers and executes an ML pipeline on the fly whenever there’s new data. It’s quite easy to productionize the pipeline with Flyte and AWS Lambda, as seen in this tutorial. We can also have a front-end application on top of this flow to make the application even more accessible.

If you want to give feedback about this tutorial or have questions regarding the implementation, please post in the comments below!