Simple is beautiful: Revolutionizing GNN Training Infrastructure at LinkedIn

During December's Flyte Community Sync, Shuying Liang shared how LinkedIn's AI platform team has developed a groundbreaking approach to managing distributed deep learning infrastructure, specifically for Graph Neural Network (GNN) training. The solution tackles complex challenges in data service orchestration through the Flyte Agent Framework.

The Challenge

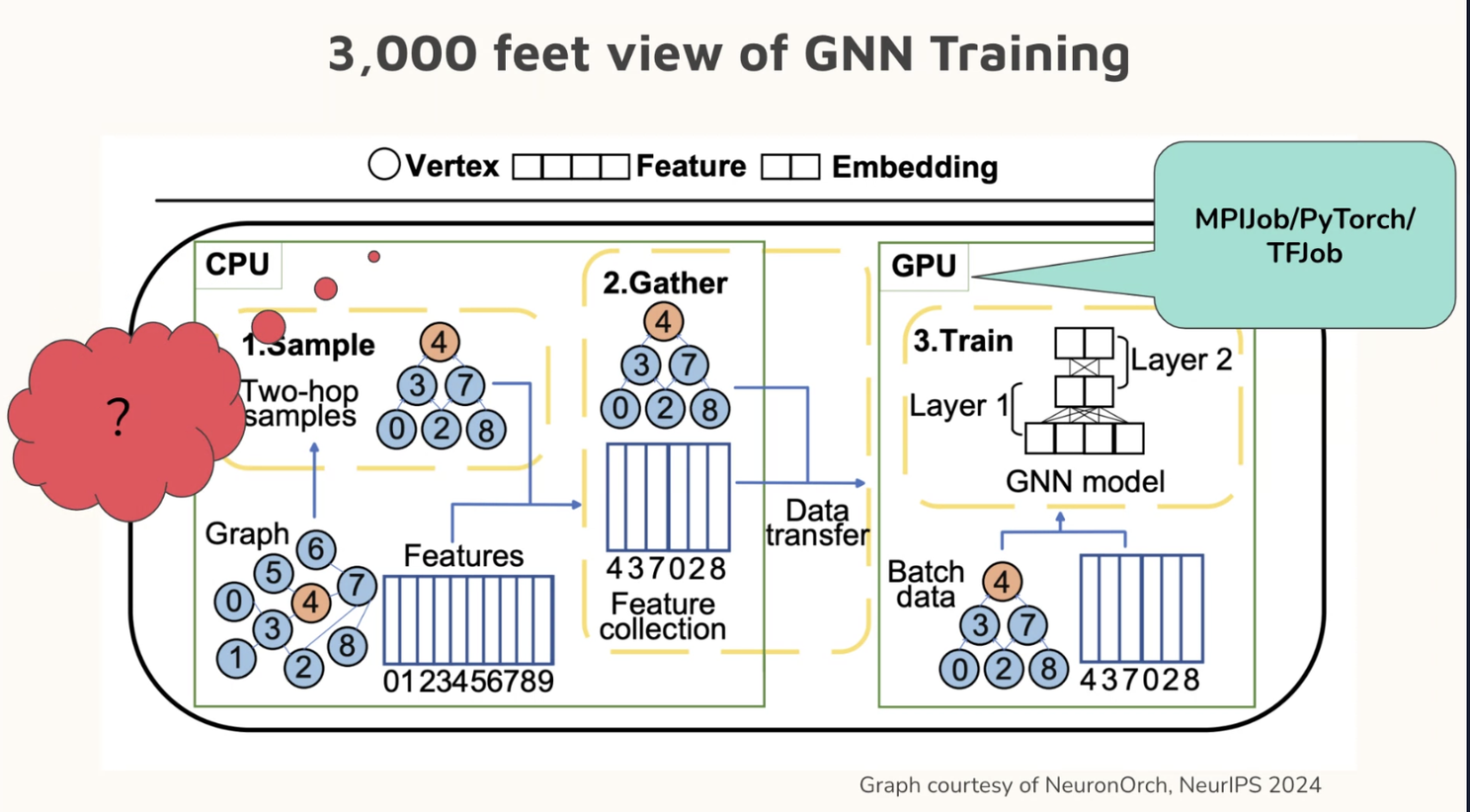

Graph Neural Networks are critical for understanding complex relationships across LinkedIn's professional networks. However, training these models at scale involves intricate data loading, sampling, and processing across multiple nodes and GPUs. What was missing was infrastructure that determines how and where these data services run on Kubernetes, and that keeps them scalable and reliable alongside the training or inference processes they serve.

The LinkedIn Approach

LinkedIn has been migrating all of its training pipelines to Flyte, leveraging the existing Flyte integrations to build a complete production AI platform.

To streamline the provisioning and scaling of datasets, LinkedIn first explored developing a Flyte backend plugin. However, the team found that the Flyte Agent Framework offered significant advantages for extending the platform: simpler testing, integration, and configuration rollout.

LinkedIn developed a custom Flyte Agent that:

- Decouples business logic from infrastructure complexities

- Simplifies Kubernetes orchestration

- Enables scalable, reliable data services for deep learning

- Works across multiple LinkedIn data centers in Texas and Virginia

Key Technical Approach

Rather than complicating Flyte backend plugin development with a Kubernetes operator, LinkedIn utilized the Flyte Agent Framework to:

- Harness Kubernetes-native constructs like StatefulSets and Services to reliably provision and scale data services.

- Offer flexible task definitions for users, enhancing adaptability.

- Simplify CI/CD processes for custom agent deployment.
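To make the StatefulSet and Service pairing concrete, here is a minimal sketch of the two manifests expressed as plain Python dictionaries. It is not LinkedIn's implementation: the function names, the image, the port, and the choice of a headless Service are all assumptions made for illustration. The per-pod DNS pattern is standard Kubernetes behavior for a StatefulSet governed by a headless Service, assuming the default `cluster.local` cluster domain.

```python
from typing import Dict, List, Tuple


def build_manifests(
    name: str,
    replicas: int,
    image: str,
    command: List[str],
    port: int,
    namespace: str = "default",
) -> Tuple[Dict, Dict]:
    """Return (statefulset, service) manifests for a hypothetical data service."""
    labels = {"app": name}
    # Assumption: a headless Service (clusterIP "None") so each pod gets its own DNS record.
    service = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {"clusterIP": "None", "selector": labels, "ports": [{"port": port}]},
    }
    statefulset = {
        "apiVersion": "apps/v1",
        "kind": "StatefulSet",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "serviceName": name,  # ties pod DNS names to the Service above
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "command": command,
                            "ports": [{"containerPort": port}],
                        }
                    ]
                },
            },
        },
    }
    return statefulset, service


def pod_dns_names(name: str, replicas: int, namespace: str = "default") -> List[str]:
    """Stable per-pod hostnames: <statefulset>-<ordinal>.<service>.<namespace>.svc.cluster.local"""
    return [f"{name}-{i}.{name}.{namespace}.svc.cluster.local" for i in range(replicas)]
```

Because StatefulSet pods keep their ordinal names across restarts, a client that knows the service name and replica count can compute every hostname up front, without querying the cluster.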

This approach centers on simple APIs and workflow management that streamline the end-to-end user experience. Its key elements are:

Data Service Configuration

- DataServiceTask is the DSL LinkedIn created; it lets users define infrastructure parameters such as resource requirements, number of replicas, and node execution commands

- Uses DeepGNN for graph data services and feature aggregations
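The DataServiceTask API itself has not been published, so the following is only a rough sketch of what a declarative configuration with those three kinds of parameters could look like. `DataServiceConfig`, its field names, and its defaults are hypothetical and are not taken from LinkedIn's code.

```python
from dataclasses import asdict, dataclass
from typing import Dict, List


@dataclass(frozen=True)
class DataServiceConfig:
    """Hypothetical stand-in for the parameters a DataServiceTask might accept."""

    name: str
    replicas: int        # number of data service nodes
    command: List[str]   # command each node executes
    cpu: str = "4"       # resource requirements, in Kubernetes quantity notation
    memory: str = "16Gi"

    def __post_init__(self) -> None:
        # Fail at definition time rather than at deployment time.
        if self.replicas < 1:
            raise ValueError("replicas must be at least 1")
        if not self.command:
            raise ValueError("command must not be empty")

    def to_custom(self) -> Dict:
        """Serialize to a plain dict, the kind of payload an agent could receive."""
        return asdict(self)


# Example definition; the command shown is a placeholder, not a real DeepGNN invocation.
config = DataServiceConfig(
    name="gnn-data",
    replicas=3,
    command=["python", "-m", "my_data_service"],
)
```

Keeping the definition declarative means the user states what the service needs, and the agent decides how to turn that into Kubernetes objects.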

Training Job Execution

- No changes to existing training job processes

- Environment variables are used to specify data service endpoints in training jobs

- Allows reusing data services across different workflows and training runs
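On the training side, reading endpoints from the environment could look roughly like the sketch below. The variable name `DATA_SERVICE_ENDPOINTS` and the comma-separated format are assumptions; the talk summary does not specify either.

```python
import os
from typing import List, Mapping, Optional

# Hypothetical variable name; the source does not say what LinkedIn calls it.
ENDPOINTS_VAR = "DATA_SERVICE_ENDPOINTS"


def data_service_endpoints(env: Optional[Mapping[str, str]] = None) -> List[str]:
    """Parse a comma-separated list of host:port endpoints from the environment."""
    env = os.environ if env is None else env
    raw = env.get(ENDPOINTS_VAR, "")
    endpoints = [item.strip() for item in raw.split(",") if item.strip()]
    if not endpoints:
        raise RuntimeError(f"{ENDPOINTS_VAR} is not set or is empty")
    return endpoints
```

A training script would call `data_service_endpoints()` once at startup. Since the endpoints arrive through the environment rather than through code, the same script can point at a freshly provisioned data service or at one reused from an earlier run.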

System Architecture

- Agent framework dispatches task requests to custom agents

- Agents implement callback functions to create, retrieve, and delete Kubernetes-native constructs such as StatefulSets and Services

- Backend plugin (integrated with Kubeflow) manages training pods

- Provides stable and predictable endpoints for communication between training and data services
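The create, retrieve, and delete lifecycle can be illustrated with a toy agent. This sketch deliberately avoids the real flytekit agent classes and the Kubernetes client: `FakeCluster`, `DataServiceAgent`, and the status strings are all invented here so the example runs without a cluster, and they should not be read as the actual interfaces.

```python
from typing import Dict, Optional, Tuple


class FakeCluster:
    """In-memory stand-in for the Kubernetes API (illustration only)."""

    def __init__(self) -> None:
        self._objects: Dict[Tuple[str, str], dict] = {}

    def apply(self, kind: str, name: str, spec: dict) -> None:
        self._objects[(kind, name)] = spec

    def read(self, kind: str, name: str) -> Optional[dict]:
        return self._objects.get((kind, name))

    def remove(self, kind: str, name: str) -> None:
        # Removing a missing object is a no-op, which keeps delete idempotent.
        self._objects.pop((kind, name), None)


class DataServiceAgent:
    """Hypothetical agent exposing the three callbacks described above."""

    KINDS = ("StatefulSet", "Service")

    def __init__(self, cluster: FakeCluster) -> None:
        self.cluster = cluster

    def create(self, name: str, replicas: int) -> str:
        """Provision both objects and return an ID used by later calls."""
        self.cluster.apply("StatefulSet", name, {"replicas": replicas})
        self.cluster.apply("Service", name, {})
        return name

    def get(self, resource_id: str) -> str:
        """Report a coarse status based on which objects exist."""
        present = [self.cluster.read(kind, resource_id) is not None for kind in self.KINDS]
        if all(present):
            return "RUNNING"
        return "PARTIAL" if any(present) else "NOT_FOUND"

    def delete(self, resource_id: str) -> None:
        """Tear down everything create() provisioned."""
        for kind in self.KINDS:
            self.cluster.remove(kind, resource_id)
```

The resource ID returned by `create` is the only state the caller has to carry between calls, which is what lets a framework poll `get` and later invoke `delete` without knowing anything about the underlying objects.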

With these elements in place, LinkedIn provides a flexible, reliable platform for productionizing machine learning training environments, with easy-to-use APIs and efficient resource management.

Future Outlook

LinkedIn plans to open-source its data service solution, contributing back to the Flyte open-source community and helping other organizations streamline their deep learning infrastructure.

Stay tuned for more details as LinkedIn prepares to share its implementation with the world.